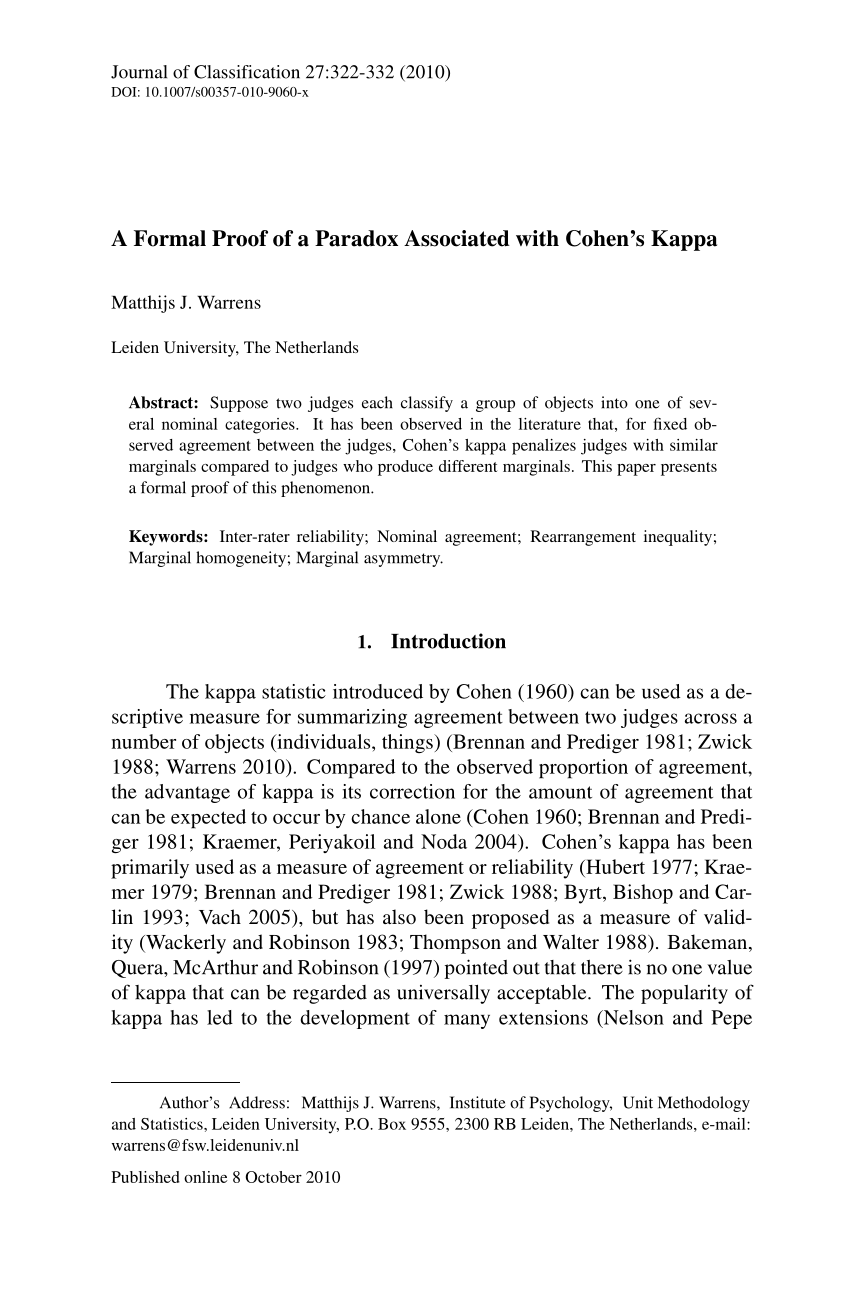

PDF) Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification (2020) | Giles M. Foody | 87 Citations

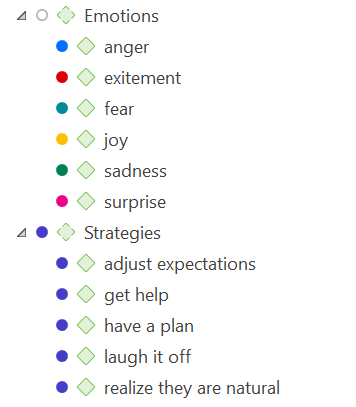

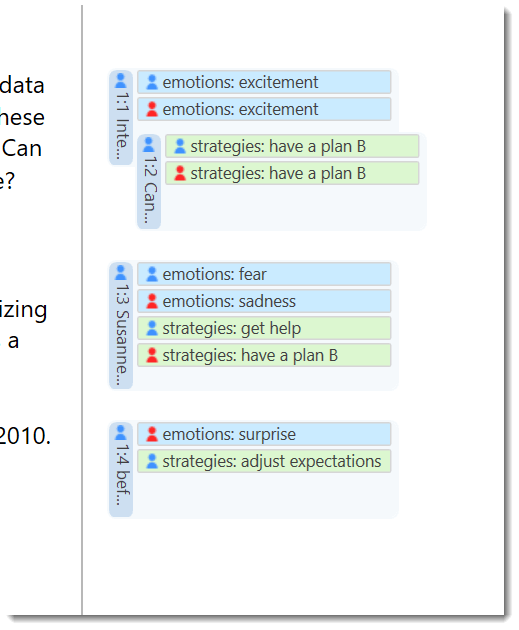

Medición del Acuerdo entre Codificadores: Por qué el Kappa de Cohen no es una buena opción - ATLAS.ti | El software nº 1 para el análisis cualitativo de datos

Comparing dependent kappa coefficients obtained on multilevel data - Vanbelle - 2017 - Biometrical Journal - Wiley Online Library

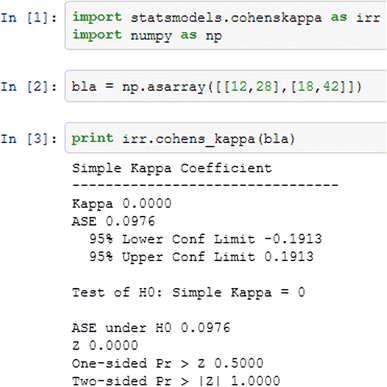

Medición del Acuerdo entre Codificadores: Por qué el Kappa de Cohen no es una buena opción - ATLAS.ti | El software nº 1 para el análisis cualitativo de datos

Inter-observer variation can be measured in any situation in which two or more independent observers are evaluating the same thing Kappa is intended to. - ppt download

![PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/6d3768fde2a9dbf78644f0a817d4470c836e60b7/4-Table2-1.png)

PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar

PDF) Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification (2020) | Giles M. Foody | 87 Citations

PDF) Free-Marginal Multirater Kappa (multirater κfree): An Alternative to Fleiss Fixed-Marginal Multirater Kappa

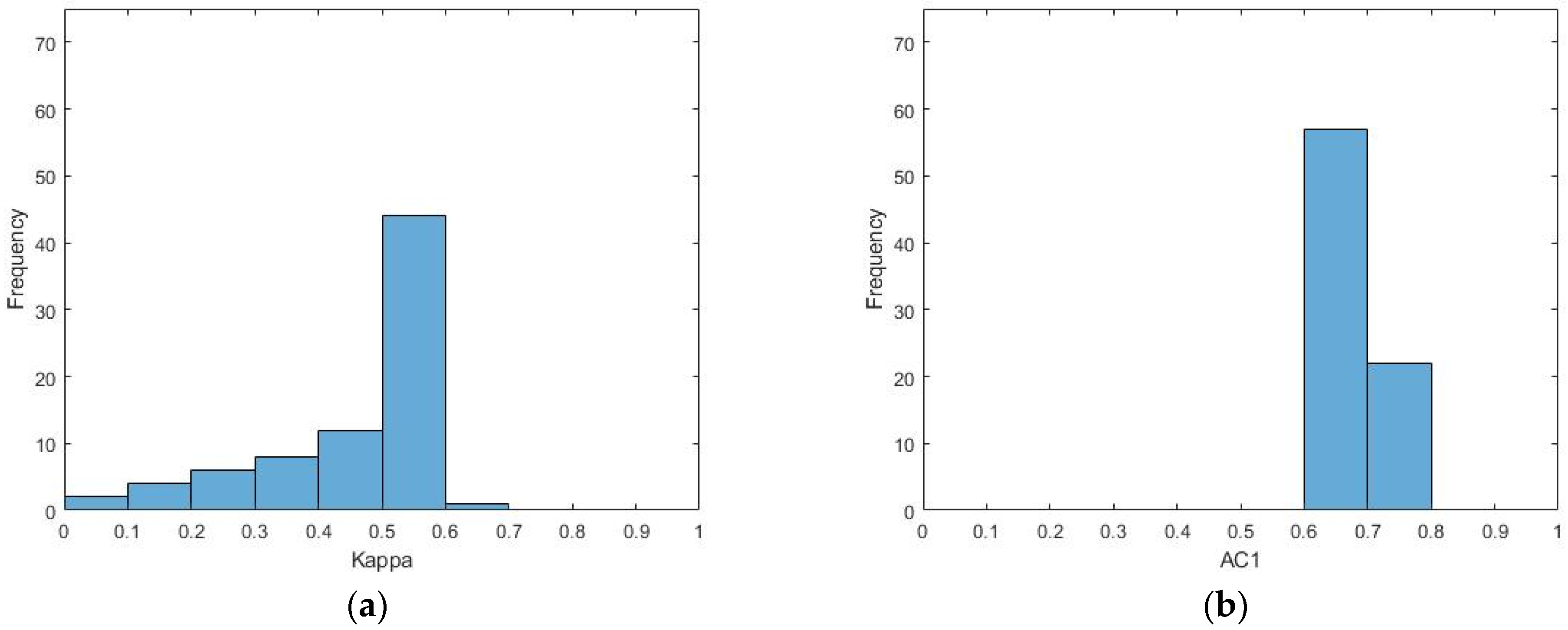

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

Medición del Acuerdo entre Codificadores: Por qué el Kappa de Cohen no es una buena opción - ATLAS.ti | El software nº 1 para el análisis cualitativo de datos

PDF) Assessing the accuracy of species distribution models: prevalence, kappa and the true skill statistic (TSS) | Bin You - Academia.edu

Medición del Acuerdo entre Codificadores: Por qué el Kappa de Cohen no es una buena opción - ATLAS.ti | El software nº 1 para el análisis cualitativo de datos

The comparison of kappa and PABAK with changes of the prevalence of the... | Download Scientific Diagram